Your Data Has Power

How do I cut operational inefficiencies and costs by avoiding numerous recirculations at my ultrafiltration process step?

For this pharmaceutical company, the data was plentiful. They had material and process data spread across systems like Oracle, DeltaV, and GLIMS, and there were over 100 parameters controlling the process. They didn’t lack data. They lacked context.

To answer the question, they used Aizon Unify to ingest, standardize, analyze, and monitor data in a GxP-compliant platform. Of course, one question leads to more, so they ultimately set out to answer two additional questions:

Which Critical Process Parameter (CPP) has the biggest impact on polarimetry at the outlet of the ultrafiltration unit?

Then, what CPP set point yields the optimal polarimetry and avoids recirculation?

Finding the CPP required first understanding the data and the interrelated process variables, then applying AI to predict values, within approved ranges, that would eliminate the recirculation step.

They reached a point where recirculations were no longer necessary, and they estimated a 61% reduction in the total number of runs and a 53% boost in process effectiveness.

How do I improve the yield of my downstream bioprocess?

This biotech site produces a rare-disease medicine whose value per gram can reach hundreds of thousands of dollars, making it important to optimize for every gram per batch. The organization had a lot of data and fairly sophisticated processes, but it relied heavily on manual data entry and spreadsheets, which made advanced analytics difficult and time-consuming. On top of that, it was operating on a trial-and-error basis to improve batch performance.

First step? You guessed it… data ingestion.

They identified five sources relevant to their downstream bioprocess: material genealogy, electronic batch records, process data (CPPs), ERP planning data, and LIMS data (Critical Quality Attributes, or CQAs).

They leveraged AI models based on Aizon’s principal component analysis (PCA), which, when applied to their data, helped them identify 36 key variables (out of a pool of 1,000) that impacted their processes. To develop the predictive model, Aizon used LIMS data from 104 downstream runs identified as containing meaningful data. Those runs were then used to train the AI model in Aizon’s GxP-compliant environment.
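The source doesn't describe how Aizon's PCA narrows 1,000 variables down to 36, but one common heuristic is to rank variables by the size of their loadings on the first principal component. Below is a minimal, dependency-free Python sketch of that heuristic, computing the first component by power iteration on the correlation matrix. The variable names and values are hypothetical, and a real screen at this scale would typically use a linear algebra library and look at more than one component.

```python
from statistics import mean, pstdev

def standardize(column: list[float]) -> list[float]:
    """Scale a column to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(column), pstdev(column)
    if sigma == 0:
        raise ValueError("constant column carries no variance")
    return [(v - mu) / sigma for v in column]

def first_component_loadings(data: dict[str, list[float]], iterations: int = 500) -> dict[str, float]:
    """Loadings of the first principal component, via power iteration on the correlation matrix."""
    cols = [standardize(col) for col in data.values()]
    n, p = len(cols[0]), len(cols)
    corr = [[sum(a * b for a, b in zip(cols[i], cols[j])) / n for j in range(p)]
            for i in range(p)]
    vec = [1.0] * p
    for _ in range(iterations):
        nxt = [sum(corr[i][j] * vec[j] for j in range(p)) for i in range(p)]
        norm = sum(x * x for x in nxt) ** 0.5
        vec = [x / norm for x in nxt]  # keep the vector at unit length
    return dict(zip(data.keys(), vec))

def top_variables(data: dict[str, list[float]], k: int) -> list[str]:
    """Keep the k variables with the largest absolute loadings."""
    loadings = first_component_loadings(data)
    return sorted(loadings, key=lambda name: abs(loadings[name]), reverse=True)[:k]

# Hypothetical readings across five runs.
runs = {
    "column_load": [1.0, 2.0, 3.0, 4.0, 5.0],
    "elution_volume": [2.1, 3.9, 6.2, 7.8, 10.1],
    "hold_time": [5.0, 3.0, 5.0, 3.0, 4.0],
}
print(top_variables(runs, k=2))
```

Standardizing first matters: without it, variables measured in large units would dominate the component purely because of their scale.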

They saw a 93% reduction in the time required for one specific step. The digital process uses the AI-based predictive models to simulate decisions, allowing the organization to see likely outcomes and ultimately reach optimal results with lower risk and a higher degree of confidence.

How do I find (then address) inefficiencies in my process?

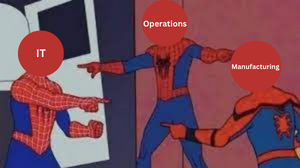

This large global insulin manufacturer experienced production delays on a critical, advanced packaging line, problems that were causing supply chain bottlenecks. The kicker? They had no idea what was causing the line to run behind. Add to that too many stops and starts and too much scrap, and the result was a finger-pointing battle among IT, Operations, and Manufacturing.

Data came from multiple sources, including the warehouse, temperature sensors, machine PLCs, automated guided vehicles (AGVs), MES and ERP systems, and IT infrastructure. Furthermore, most of this data was highly siloed, making it difficult to find clean batch data from which to gain insights. The organization used Aizon Unify to ingest the data (are you sensing a theme?) and applied a contextualization model to link and relate data from the disparate sources. The data was then cleaned, and a PCA was performed to highlight the factors most relevant to the best and worst batch performance.
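The source doesn't detail Aizon's contextualization model. As a rough illustration of the idea, the Python sketch below links records from several siloed sources under a shared batch identifier, then keeps only batches with data from every required source. The source names, field names, and the assumption that every system tags its records with the same `batch_id` are hypothetical; in practice, linking often also has to align timestamps and equipment IDs.

```python
from collections import defaultdict

Record = dict[str, object]

def contextualize(sources: dict[str, list[Record]],
                  key: str = "batch_id") -> dict[str, dict[str, list[Record]]]:
    """Group every record under its batch ID, then under the source it came from."""
    linked: dict = defaultdict(lambda: defaultdict(list))
    for source_name, records in sources.items():
        for record in records:
            batch = record.get(key)
            if batch is None:
                continue  # records without a batch ID cannot be linked in this sketch
            fields = {k: v for k, v in record.items() if k != key}
            linked[batch][source_name].append(fields)
    return {batch: dict(by_source) for batch, by_source in linked.items()}

def complete_batches(linked: dict[str, dict[str, list[Record]]],
                     required: set[str]) -> dict[str, dict[str, list[Record]]]:
    """Keep only batches that have data from every required source."""
    return {batch: data for batch, data in linked.items() if required.issubset(data)}

# Hypothetical extracts from three siloed systems.
sources = {
    "mes": [{"batch_id": "B1", "line_speed": 120}, {"batch_id": "B2", "line_speed": 95}],
    "temperature_sensor": [{"batch_id": "B1", "temp_c": 21.5}],
    "plc": [{"batch_id": "B1", "stops": 3}, {"batch_id": "B2", "stops": 11}, {"stops": 1}],
}
linked = contextualize(sources)
clean = complete_batches(linked, {"mes", "temperature_sensor", "plc"})
print(sorted(clean))
```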

From there, an AI model was built, and the platform was configured to alert both the organization and its operators when actual overall equipment effectiveness (OEE) deviated from the prediction, to detect what kind of issue was affecting batch performance, and to indicate what they should look into to fix it. Factors ranging from time of day to humidity were impacting the production line. By acting on this understanding and on Aizon’s predictive models, the organization increased OEE by 10% and identified the root cause of its problems.
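OEE is conventionally computed as availability × performance × quality. The minimal Python sketch below computes it from hypothetical shift counts and flags when the actual value drifts from a predicted one by more than a tolerance. The predicted value here is just a number standing in for a model's output, and the field names and the 5-percentage-point tolerance are assumptions; the source doesn't say how Aizon's platform sets its alert thresholds.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ShiftCounts:
    planned_time_min: float      # time the line was scheduled to run
    run_time_min: float          # planned time minus stops
    ideal_cycle_time_min: float  # ideal minutes per unit
    total_units: int
    good_units: int              # units that were not scrapped or reworked

def oee(c: ShiftCounts) -> float:
    availability = c.run_time_min / c.planned_time_min
    performance = (c.ideal_cycle_time_min * c.total_units) / c.run_time_min
    quality = c.good_units / c.total_units
    return availability * performance * quality

def deviation_alert(actual: float, predicted: float, tolerance: float = 0.05) -> Optional[str]:
    """Return an alert message when actual OEE differs from the prediction by more than `tolerance`."""
    gap = actual - predicted
    if abs(gap) <= tolerance:
        return None
    direction = "below" if gap < 0 else "above"
    return f"OEE {actual:.1%} is {abs(gap):.1%} {direction} the predicted {predicted:.1%}"

shift = ShiftCounts(planned_time_min=480, run_time_min=400, ideal_cycle_time_min=0.5,
                    total_units=700, good_units=665)
actual = oee(shift)
print(f"{actual:.1%}")
print(deviation_alert(actual, predicted=0.78))
```

Splitting OEE into its three factors also hints at what kind of issue is at play: a drop in availability points to stops and starts, while a drop in quality points to scrap.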